Abhijeet Nayak

I am an ELLIS PhD student at the University of Technology in Nuremberg, under the supervision of Prof. Wolfram Burgard. My research focuses on applying foundation models to robotics, particularly robot navigation and manipulation. I earned my master's degree in Computer Science from the University of Freiburg. During my time there, I worked with the Robot Learning Lab on radar localization using prior LiDAR maps. I also served as a research assistant with the Computer Vision Group, where I focused on object manipulation for robotic arms. Before my master's studies, I was part of the vehicle localization team at Mercedes-Benz R&D India, developing lane-level localization algorithms for the Drive Pilot system. These algorithms are now deployed in production Mercedes-Benz S-Class vehicles.

Research

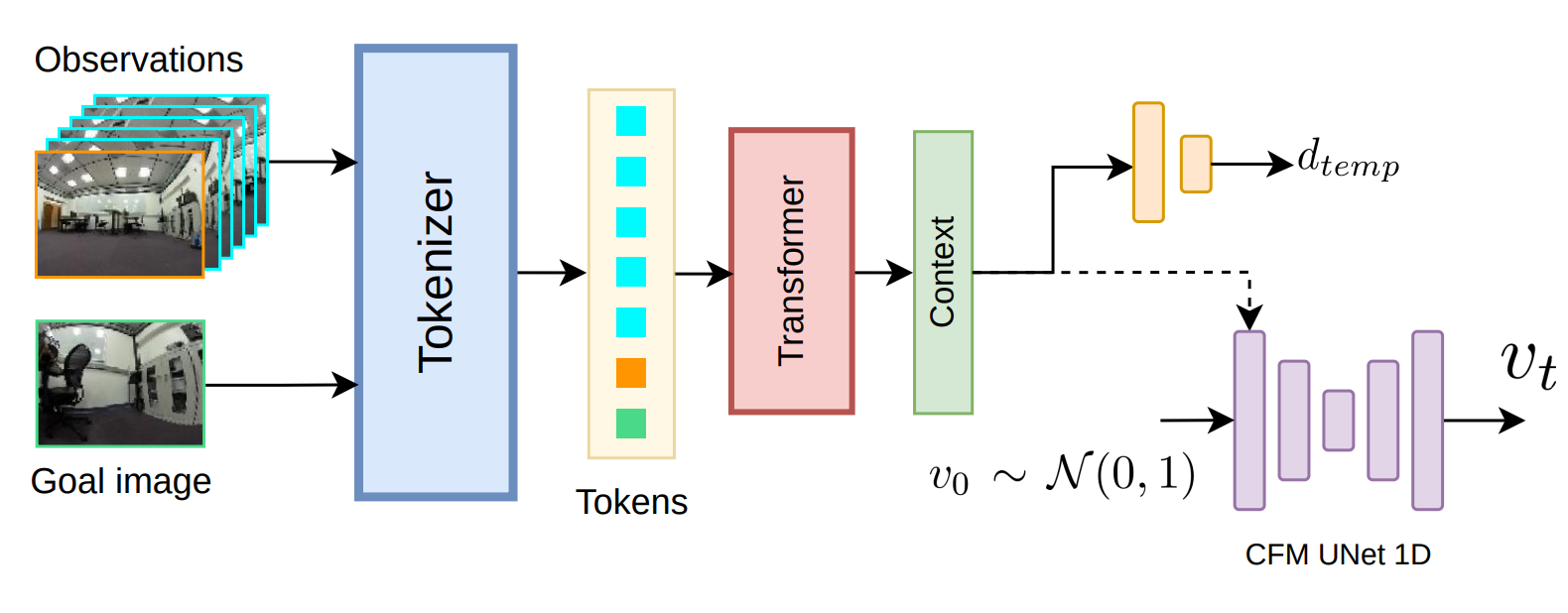

FlowNav: Learning Efficient Navigation Policies via Conditional Flow Matching

Samiran Gode*, Abhijeet Nayak*, Wolfram Burgard

Learning Effective Abstractions for Planning (LEAP) Workshop, CoRL, 2024

Differentiable Optimization Everywhere: Simulation, Estimation, Learning, and Control (Workshop), CoRL, 2024

arXiv

FlowNav learns actions for robot navigation using Conditional Flow Matching. Because of its fast inference, FlowNav is well suited to environments with dynamic objects.

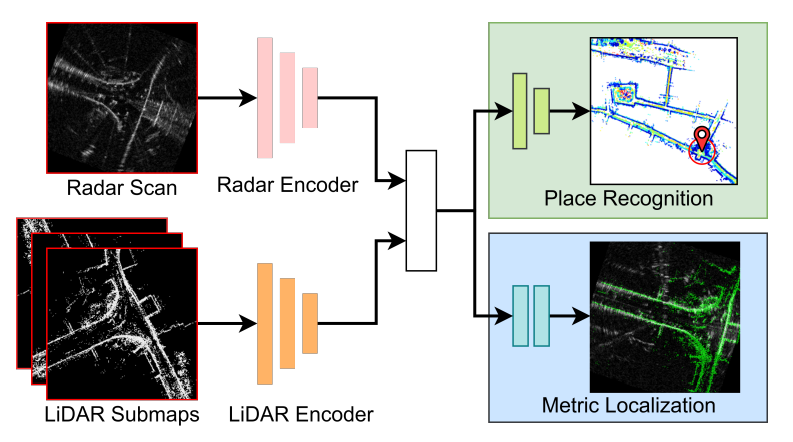

RaLF: Flow-based Global and Metric Radar Localization in LiDAR Maps

Abhijeet Nayak*, Daniele Cattaneo*, Abhinav Valada

International Conference on Robotics and Automation, 2024

project page / arXiv / video / code

Global and metric localization of radar data in a prior LiDAR map of the environment. Place recognition is used for global localization, whereas optical flow between the radar image and the LiDAR submap is used for metric localization. Our method outperforms prior methods on unseen datasets.

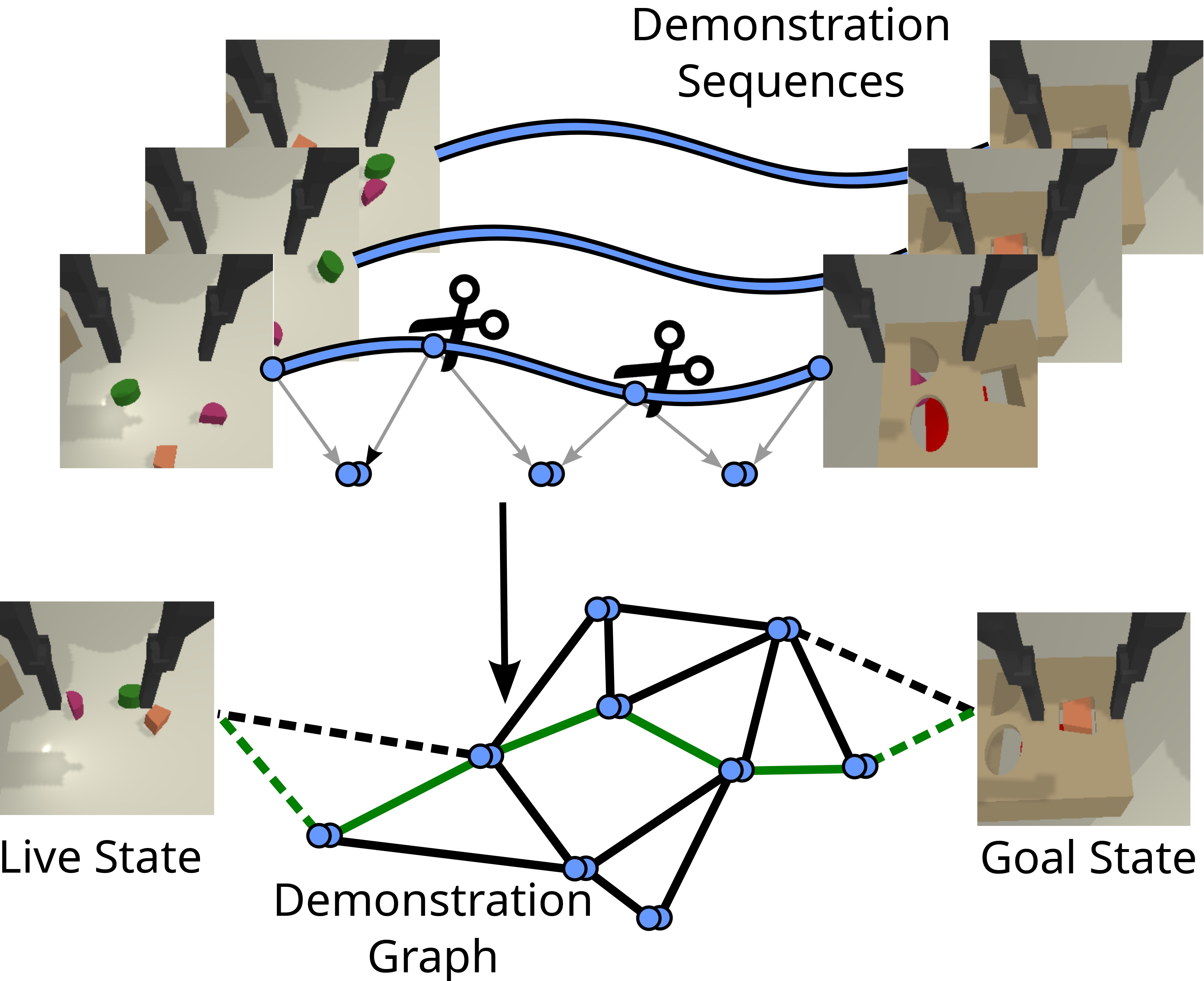

Compositional Servoing by Recombining Demonstrations

Max Argus*, Abhijeet Nayak*, Martin Büchner, Silvio Galesso, Abhinav Valada, Thomas Brox

International Conference on Robotics and Automation, 2024

project page / arXiv / video / code

Demonstrations collected for one object manipulation task can be reused for other tasks. Here, we sequentially recombine parts of different demonstrations to show that object manipulation skills can be transferred between tasks.

Evaluation of Fully-Convolutional One-Stage Object Detection for Drone Detection

Abhijeet Nayak*, Mondher Bouazizi*, Tasweer Ahmad*, Artur Gonçalves, Bastien Rigault, Raghvendra Jain, Yutaka Matsuo, Helmut Prendinger

Image Analysis and Processing (ICIAP) Workshops, 2022

paper

In the Drone-vs-Bird detection challenge, we used the Fully-Convolutional One-Stage (FCOS) network for drone detection. To improve detection accuracy and reduce false positives, we applied data augmentation, which made the model more robust against drone-like objects. The improved detection performance earned us third place in the challenge.

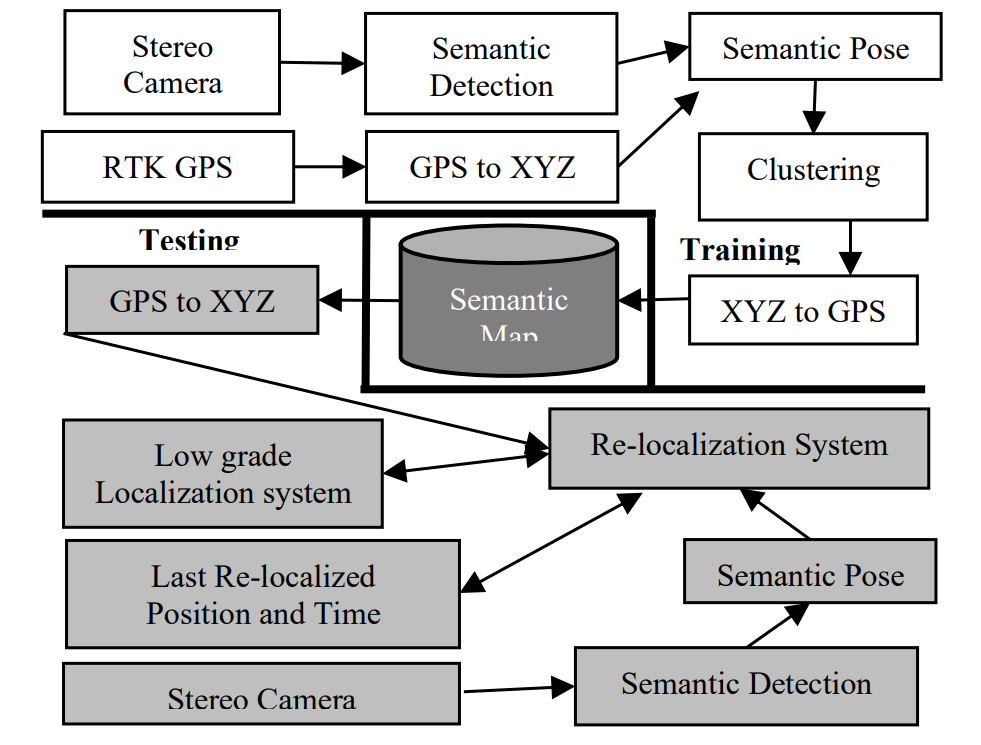

Re-localization for Self-Driving Cars using Semantic Maps

Lhilo Kenye, Rishitha Palugulla, Mehul Arora, Bharath Bhat, Rahul Kala, Abhijeet Nayak

International Conference on Robotic Computing, 2020

paper

We propose a vehicle re-localization algorithm that localizes the vehicle against a semantic map of the environment. The semantic map consists of landmarks detected along the vehicle's path.

Stole this website from Jon Barron.